The Story Behind Abstractive Summarization

Information overload is a real issue today. News consumption is often overwhelming. We are bombarded with articles daily. Sifting through it all is time-consuming. Abstractive text summarization offers a solution. It helps us digest news efficiently.

Imagine grasping the essence of a news story without reading the full article. Abstractive summarization creates concise summaries. These are not just extracts of the original text. They are newly phrased, shorter versions. This is the power of abstractive methods.

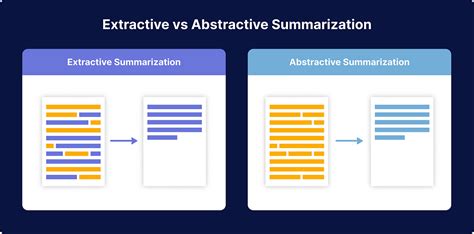

Extractive summarization simply picks sentences. It copies them from the original article. Abstractive summarization is different. It understands the source text’s meaning. Then, it generates new sentences to summarize it. This approach mirrors human summarization.
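To make the contrast concrete, here is a minimal sketch of an extractive baseline. It assumes a simple word-frequency score, which is only one of many possible ways to rank sentences; the function name `extractive_summary` and the sample text are illustrative. Note that every sentence it returns is copied verbatim from the input, which is exactly what an abstractive system avoids.

```python
import re
from collections import Counter


def extractive_summary(text, num_sentences=1):
    """Pick the highest-scoring sentences and return them unchanged."""
    # Naive sentence split on ., ! or ? followed by whitespace (an assumption).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freqs = Counter(re.findall(r"[a-z]+", text.lower()))

    def score(sentence):
        # Average document-level frequency of the words in the sentence.
        words = re.findall(r"[a-z]+", sentence.lower())
        return sum(freqs[w] for w in words) / max(len(words), 1)

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    # Keep the original article order for the selected sentences.
    return [s for s in sentences if s in top]


article = (
    "The council approved the budget. "
    "The budget funds new schools. "
    "Critics called the vote rushed."
)
print(extractive_summary(article))
```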

What Makes Abstractive Summarization Tick?

Abstractive text summarization is a process. It condenses information from a source. The output is a shorter, abstract summary. Crucially, this summary contains novel phrases. It isn’t just copied text. Think of it like a human retelling a story.
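One rough way to check whether a summary really contains novel phrases is to count how many of its n-grams never occur in the source. The sketch below assumes whitespace tokenization and bigrams; `novel_ngram_ratio` and the sample sentences are illustrative names and data, not part of any standard library.

```python
def ngrams(tokens, n):
    """Return the set of n-grams (as tuples) in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def novel_ngram_ratio(source, summary, n=2):
    """Fraction of distinct summary n-grams that never appear in the source."""
    source_grams = ngrams(source.lower().split(), n)
    summary_grams = ngrams(summary.lower().split(), n)
    if not summary_grams:
        return 0.0
    return len(summary_grams - source_grams) / len(summary_grams)


source = "the city council approved the new budget on monday after a long debate"
copied = "the city council approved the new budget"
rephrased = "council members passed the budget following lengthy discussion"

print(novel_ngram_ratio(source, copied))     # 0.0, every bigram is copied
print(novel_ngram_ratio(source, rephrased))  # 1.0, every bigram is new
```

A ratio near zero suggests the summary is effectively extractive, while a higher ratio suggests rephrasing. It says nothing about whether the new wording is accurate.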

The core idea is to understand the meaning of a text and then re-express it concisely. This goes beyond simple keyword extraction. It requires natural language processing (NLP). NLP techniques enable machines to “read” and “write”.

For news articles, this is incredibly useful. Readers can quickly grasp key points. Journalists can automate summary generation. Researchers can analyze news trends efficiently. Abstractive summarization is transforming news processing.

Deep Learning Models

Deep learning powers modern NLP. It has revolutionized abstractive summarization. Neural networks are the key component. These networks learn complex patterns in data. They are loosely inspired by how the brain learns.

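Specifically, transformer networks are dominant now. Models like BERT and GPT excel at language tasks, and encoder-decoder transformers such as BART and T5 are commonly used for summarization. They handle long-range dependencies in text. This is crucial for understanding context. Transformers have pushed the boundaries of NLP.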

Encoder-decoder architectures are common. The encoder reads the input text. It creates a compressed representation. The decoder then generates the summary. This two-stage process is highly effective. It allows for nuanced text generation.

How It Actually Works

Data preprocessing is the first step. News articles are often messy. Cleaning and formatting are essential. This includes removing noise, like HTML tags. Tokenization breaks text into smaller units.
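As a minimal sketch of these two steps, the code below strips HTML tags with Python's standard `html.parser` module and then splits the cleaned text into lowercase word and punctuation tokens. The helper names `clean_article` and `tokenize` are illustrative, and the regex tokenizer is an assumption: production systems typically use subword tokenizers instead.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def clean_article(raw_html):
    """Remove tags and collapse runs of whitespace."""
    parser = _TextExtractor()
    parser.feed(raw_html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()


def tokenize(text):
    """Split into lowercase word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


raw = (
    "<html><style>p{color:red}</style>"
    "<p>Markets &amp; stocks rose 3% today.</p>"
    "<script>track()</script></html>"
)
text = clean_article(raw)
print(text)
print(tokenize(text))
```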

Model training is computationally intensive. Large datasets of news articles are used. The model learns to map articles to summaries. Optimization algorithms refine model parameters. This is a crucial phase for performance.
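The article does not name a training objective, so the following is an assumption: a common choice for sequence-to-sequence models is to minimize the negative log-likelihood of the reference summary, token by token. The sketch computes that loss for hand-written probability tables (`weak_model` and `strong_model` are made-up stand-ins for real model outputs) to show what the optimizer is trying to push down.

```python
import math


def sequence_nll(step_probs, reference):
    """Negative log-likelihood of the reference tokens.

    step_probs[t] maps each candidate token to the probability the model
    assigns to it at step t; reference[t] is the correct token at step t.
    """
    return -sum(math.log(probs[tok]) for probs, tok in zip(step_probs, reference))


reference = ["council", "approves", "budget"]

# Hypothetical per-step distributions from an early and a later checkpoint.
weak_model = [
    {"council": 0.2, "the": 0.8},
    {"approves": 0.3, "rejects": 0.7},
    {"budget": 0.4, "plan": 0.6},
]
strong_model = [
    {"council": 0.9, "the": 0.1},
    {"approves": 0.8, "rejects": 0.2},
    {"budget": 0.9, "plan": 0.1},
]

print(sequence_nll(weak_model, reference))
print(sequence_nll(strong_model, reference))
```

The second value is lower because the later checkpoint puts more probability on the reference tokens. Training adjusts the parameters so this loss falls across the whole dataset.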

Inference is the summary generation stage. A trained model takes a new article. It applies learned patterns to create a summary. Decoding algorithms generate fluent text. This process happens quickly and automatically.
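Greedy decoding is the simplest decoding algorithm: at each step, pick the most probable next token and stop at an end marker. The sketch below uses a hypothetical lookup table, `TOY_MODEL`, in place of a trained decoder. A real model conditions on the encoded article and on all previously generated tokens, not just the last one.

```python
# Hypothetical next-token probabilities standing in for a trained decoder.
TOY_MODEL = {
    "<s>": {"council": 0.6, "the": 0.4},
    "council": {"approves": 0.7, "budget": 0.3},
    "the": {"council": 0.9, "budget": 0.1},
    "approves": {"budget": 0.8, "</s>": 0.2},
    "budget": {"</s>": 1.0},
}


def greedy_decode(model, max_len=10):
    """Repeatedly take the most probable next token until </s> or max_len."""
    token, output = "<s>", []
    for _ in range(max_len):
        next_probs = model[token]
        token = max(next_probs, key=next_probs.get)
        if token == "</s>":
            break
        output.append(token)
    return output


print(" ".join(greedy_decode(TOY_MODEL)))  # council approves budget
```

Greedy decoding is fast but can miss better overall sequences. Beam search, which keeps several candidate sequences alive at each step, is a common alternative.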

Why Abstractive Summarization Matters Today

For readers, abstractive summaries save time. They offer quick insights into news. Busy individuals can stay informed efficiently. Mobile news consumption benefits greatly. Summaries fit small screens perfectly.

News organizations gain efficiency too. Automated summarization aids content creation. It can speed up news dissemination. It allows journalists to focus on in-depth reporting. This enhances productivity.

For research, abstractive text summarization is a hot topic. It drives NLP advancements. Researchers explore new model architectures. They improve summary quality and factuality. This field is rapidly evolving.

A Head-to-Head Comparison

Several techniques exist for abstractive summarization. Recurrent neural networks (RNNs) were early approaches. They process text sequentially. However, they struggle with long texts. Transformers address this limitation.

Transformers, like BART and T5, are state-of-the-art. They use attention mechanisms effectively. Attention allows the model to focus on relevant parts of the input. This improves context understanding. They generally outperform RNN-based models on summarization tasks.
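The attention idea can be sketched in a few lines. The code below implements scaled dot-product attention for a single query in plain Python: each key is scored against the query, the scores are turned into weights with a softmax, and the output is the weighted average of the values. The vectors are made-up toy numbers, and real models add learned projections and multiple attention heads.

```python
import math


def softmax(scores):
    """Convert raw scores to weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, context = attention(query, keys, values)
print(weights)  # the first and third keys match the query best
print(context)
```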

Pre-trained language models are also impactful. These models are trained on massive text corpora. Fine-tuning them for summarization is efficient. They bring pre-existing knowledge to the task. This often leads to better results.

| Technique | Advantages | Disadvantages |
|---|---|---|
| RNNs | Sequential processing, simpler to implement | Struggles with long texts, vanishing gradients |
| Transformers | Handles long texts, attention mechanism | Computationally intensive, complex |
| Pre-trained Models | Leverage existing knowledge, efficient fine-tuning | Can be biased by pre-training data |

The Easy Way to Evaluate Summaries

Evaluation is critical in research. We need to measure summary quality. Automatic evaluation metrics are commonly used. ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is popular. It compares generated summaries to reference summaries.

ROUGE metrics calculate the overlap between a generated summary and a reference summary. ROUGE-1 measures unigram overlap. ROUGE-2 measures bigram overlap. ROUGE-L considers the longest common subsequence. Higher ROUGE scores indicate greater overlap with the reference, which is usually taken as a sign of a better summary.
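The three variants can be sketched directly from their definitions. This simplified version assumes whitespace tokenization and reports recall only; full ROUGE implementations usually also report precision and F-measure and may apply stemming. The function names are illustrative.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate, reference, n):
    """Share of reference n-grams that also appear in the candidate."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # Counter intersection keeps the minimum count of each shared n-gram.
    return sum((cand & ref).values()) / total


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_recall(candidate, reference):
    """LCS length divided by the reference length."""
    ref = reference.split()
    if not ref:
        return 0.0
    return lcs_length(candidate.split(), ref) / len(ref)


reference = "the cat sat on the mat"
candidate = "the cat lay on the mat"

print(round(rouge_n_recall(candidate, reference, 1), 3))  # 0.833
print(round(rouge_n_recall(candidate, reference, 2), 3))  # 0.6
print(round(rouge_l_recall(candidate, reference), 3))     # 0.833
```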

Other metrics exist beyond ROUGE. BLEU (Bilingual Evaluation Understudy) is also used. It is based on n-gram precision rather than recall. METEOR considers synonyms and stemming. Human evaluation is still vital. It assesses fluency and coherence directly.
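To show how a precision-oriented metric differs from ROUGE's recall, here is a sketch of the clipped n-gram precision that BLEU builds on. It is only one component: full BLEU combines several n-gram orders and adds a brevity penalty, both omitted here. The function name `clipped_precision` is illustrative.

```python
from collections import Counter


def clipped_precision(candidate, reference, n=1):
    """Share of candidate n-grams found in the reference, with counts clipped."""
    cand_tokens, ref_tokens = candidate.split(), reference.split()
    cand = Counter(tuple(cand_tokens[i:i + n]) for i in range(len(cand_tokens) - n + 1))
    ref = Counter(tuple(ref_tokens[i:i + n]) for i in range(len(ref_tokens) - n + 1))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each n-gram is credited at most as often as it occurs in the reference.
    return sum((cand & ref).values()) / total


# Repeating a common word cannot inflate the score beyond its reference count.
print(clipped_precision("the the the the", "the cat sat on the mat"))  # 0.5
```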

| Metric | Description | Advantages | Disadvantages |
|---|---|---|---|
| ROUGE | Recall-oriented n-gram overlap | Widely used, automatic, easy to calculate | May not correlate perfectly with human judgment |
| BLEU | Precision-oriented n-gram overlap | Automatic, common in machine translation | Less recall-focused than ROUGE |
| METEOR | Considers synonyms, stemming, word order | More nuanced than ROUGE, better human correlation | More complex to calculate |

The Research Frontier

Research on abstractive text summarization of news articles is a dynamic field. It is an active area of investigation. Researchers are constantly seeking improvements. The goal is to create human-quality summaries.

Challenges remain despite progress. Ensuring factual accuracy is crucial. Summaries should not introduce misinformation. Maintaining coherence and readability is also vital. The text should flow naturally.

Current research explores several directions. Improving factuality is a key focus. Methods to verify summary content are being developed. Contextual understanding is also being improved. Models are learning deeper semantic relationships. This field is rapidly advancing.

The Roadblocks to Perfection

Factual accuracy is a major hurdle. Abstractive models can sometimes hallucinate. They may generate incorrect information. This is especially problematic for news. Reliability is paramount.
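A very crude way to flag possible hallucinations is to look for numbers and capitalized words in the summary that never occur in the source. The sketch below is a heuristic under that assumption only, not an established fact-verification method: it misses paraphrased errors and can flag legitimate rewordings. The function name and the sample texts are made up for illustration.

```python
import re

NUMBER = r"\d+(?:[.,]\d+)*"


def unsupported_items(source, summary):
    """Return numbers and capitalized words in the summary absent from the source."""
    source_numbers = set(re.findall(NUMBER, source))
    source_words = set(re.findall(r"[a-z]+", source.lower()))
    flagged = set()
    for number in re.findall(NUMBER, summary):
        if number not in source_numbers:
            flagged.add(number)
    for word in re.findall(r"\b[A-Z][a-z]+\b", summary):
        # Compare case-insensitively so sentence-initial words are not flagged.
        if word.lower() not in source_words:
            flagged.add(word)
    return sorted(flagged)


source = "Acme Corp reported revenue of 120 million dollars on Tuesday, the company said."
faithful = "Acme Corp reported revenue of 120 million dollars."
suspect = "Acme reported revenue of 150 million dollars, said Globex."

print(unsupported_items(source, faithful))  # []
print(unsupported_items(source, suspect))   # ['150', 'Globex']
```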

Coherence and readability are ongoing challenges. Summaries need to be fluent and logical. They should read like naturally written text. Maintaining topic flow is crucial for understanding. This requires sophisticated language generation.

Computational resources are a practical limitation. Training large models is expensive. It requires significant computing power. This can restrict accessibility to advanced techniques. Efficient models are needed.

| Challenge | Description | Potential Solutions |
|---|---|---|
| Factual Accuracy | Summaries may contain incorrect information | Fact verification methods, knowledge integration |
| Coherence & Readability | Summaries may be disfluent or illogical | Improved decoding algorithms, better training data |
| Computational Cost | Training large models is resource-intensive | Model compression, efficient architectures |

A Deep Dive

This exploration has traversed the landscape of abstractive text summarization. We moved from the problem of information overload to the deep learning models that address it. We also examined techniques and evaluation methods.

The importance of research on abstractive text summarization of news articles is clear. It offers solutions for efficient news consumption. It empowers news organizations and fuels research. This technology is transforming information access.

Looking ahead, abstractive summarization promises even greater impact. Continued research will refine models further. We anticipate more accurate, coherent, and accessible summaries. The future of news processing is being shaped now.
